Privacy Challenges in the Age of AI

Artificial Intelligence (AI) has changed our lives in many ways. It personalises online experiences, improves healthcare predictions, powers automated transport, and drives smart home assistants. But this rapid progress raises concerns about how personal data is collected, used, and protected. As AI becomes a bigger part of daily life, questions about AI privacy, data ethics, and digital security become more pressing.

In the age of AI, privacy is not simply about securing passwords or hiding your location. It encompasses a broader landscape where algorithms can track behaviour, predict intentions, and make decisions that impact individuals and communities—often without our knowledge or consent.

This article looks at the main privacy challenges posed by AI technologies and the ethical issues they create. It also examines how individuals, businesses, and governments can balance innovation with protection.

The AI–Privacy Paradox

AI systems thrive on data. The more data they take in, the better they can spot patterns and predict outcomes. This creates a key paradox: to gain from smart services, we must give up more personal information.

Examples of AI-driven data collection:

- Voice assistants listening for commands (and sometimes more)

- Facial recognition systems monitoring public spaces

- Targeted advertising based on browsing history and preferences

- Predictive policing using crime and location data

- Health tracking apps that collect biometric and behavioural data

In each case, the question becomes: who controls this data, and how is it used?

Key Privacy Challenges in the AI Era

1. Informed Consent and Data Transparency

Many AI systems operate on data users didn’t explicitly agree to share. Terms of service are often long and hard to understand. This leads to users agreeing to invasive practices without realising it.

Challenge: Designing clear consent models that show what data is collected, why it’s collected, and how long it will be kept.

Ethical consideration: Should companies be allowed to collect “inferred” data—predictions about personality, preferences, or health based on usage patterns?

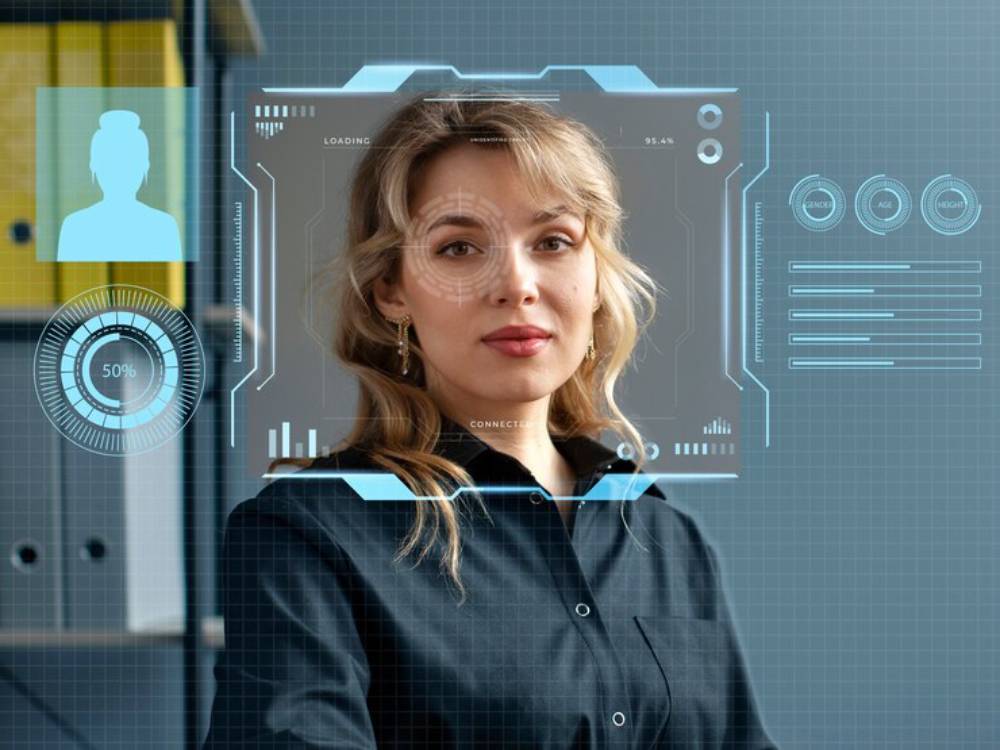

2. Surveillance and Facial Recognition

Governments and private companies are increasingly deploying facial recognition in public spaces, airports, retail stores, and schools. These systems can track movement, identify individuals, and create behavioural profiles.

Risks:

- Misidentification, especially among minority groups

- Erosion of anonymity in public spaces

- Lack of regulation or public oversight

Facial recognition raises major privacy concerns, especially when people cannot opt out.

3. Bias and Discrimination in AI Systems

Algorithms that learn from biased data often repeat and worsen those biases. This can result in unfair treatment based on race, gender, age, or socioeconomic status.

Real-world consequences:

- Biased hiring algorithms screening out qualified candidates

- Credit scoring tools that disadvantage certain groups

- Discriminatory policing practices based on flawed predictive data

Biased AI isn’t in itself a breach of privacy, but it often stems from using personal data without proper safeguards. This highlights the link between data ethics and digital security.

4. Data Security and Breaches

The more personal data AI systems store and process, the higher the risk of cyberattacks, leaks, or misuse.

Common vulnerabilities:

- Poorly secured databases

- Insider threats

- Lack of encryption

- Insufficient access controls

A breach of AI-managed data can have far-reaching consequences—especially when that data includes biometric identifiers or behavioural profiles.

5. Lack of Regulation and Accountability

AI technology is advancing faster than policy can adapt. In many jurisdictions, no clear law governs how AI systems handle personal data. This leads to inconsistencies and gaps in protection.

Challenges:

- Determining who is responsible when AI systems cause harm

- Regulating cross-border data flows in global platforms

- Creating standards that protect both innovation and privacy

Without accountability frameworks, users are left vulnerable. This lack of oversight also weakens trust in digital systems.

Global Efforts to Protect AI Privacy

Governments and institutions all over the world are now responding to the privacy concerns raised by AI.

1. GDPR (General Data Protection Regulation – EU)

- Emphasises data minimisation, purpose limitation, and user consent

- Grants individuals rights to access, correct, or delete their data

- Holds organisations accountable for data misuse or breaches

2. California Consumer Privacy Act (CCPA)

- Gives consumers control over their personal data

- Requires businesses to disclose data collection practices

- Allows users to opt out of data sales

3. OECD AI Principles and UNESCO Recommendation on the Ethics of AI

- Promote trustworthy, human-centric AI

- Encourage transparency, fairness, and accountability

Even with these initiatives, there is no cohesive global framework, and many countries still lack strong data protections for AI.

Ethical Frameworks in AI Development

Under growing pressure, tech companies and researchers are adopting ethical principles to guide AI development. These typically include:

- Fairness: Avoiding bias and ensuring equitable outcomes

- Transparency: Making AI decisions understandable and explainable

- Accountability: Assigning responsibility when things go wrong

- Privacy by design: Embedding data protection into the development process

Yet, without legal enforcement, ethical codes often remain aspirational rather than actionable.

Balancing Innovation and Privacy

While the risks are real, AI also has immense potential to improve lives. The goal is not to halt progress but to steer it responsibly.

Strategies for achieving balance:

- Data minimisation: Only collect what’s necessary

- Anonymisation and encryption: Remove identifying details where possible, and encrypt data at rest and in transit

- User control: Offer clear choices and meaningful opt-outs

- Audits and impact assessments: Regularly evaluate AI systems for privacy and bias

- Public education: Empower individuals to understand and manage their data
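
As a rough illustration of the first two strategies, the Python sketch below keeps only an allow-listed set of fields and replaces a direct identifier with a keyed hash. The field names, the `ALLOWED_FIELDS` allow-list, and the `minimise` and `pseudonymise` helpers are hypothetical and chosen only for this example. Note that keyed hashing is pseudonymisation rather than full anonymisation: whoever holds the key can still link records to the same person.

```python
import hashlib
import hmac

# Hypothetical allow-list: the only fields this illustrative service needs.
ALLOWED_FIELDS = {"user_id", "age_band", "country"}


def minimise(record: dict) -> dict:
    """Data minimisation: drop every field that is not on the allow-list."""
    return {key: value for key, value in record.items() if key in ALLOWED_FIELDS}


def pseudonymise(record: dict, secret_key: bytes) -> dict:
    """Replace the raw user_id with an HMAC-SHA256 digest of it.

    The same key and user_id always give the same digest, so records can
    still be joined, but the raw identifier is no longer stored.
    """
    result = dict(record)  # copy so the caller's record is left unchanged
    raw_id = str(record["user_id"]).encode("utf-8")
    result["user_id"] = hmac.new(secret_key, raw_id, hashlib.sha256).hexdigest()
    return result


if __name__ == "__main__":
    raw = {
        "user_id": 42,
        "age_band": "30-39",
        "country": "NZ",
        "gps_trace": [(-36.85, 174.76)],  # not needed, so it is dropped
        "contacts": ["alice", "bob"],     # not needed, so it is dropped
    }
    # In practice the key would come from a secrets manager, not source code.
    print(pseudonymise(minimise(raw), secret_key=b"example-key-only"))
```

Running minimisation before pseudonymisation means fields such as location traces never reach storage at all, which is usually a stronger safeguard than protecting them afterwards.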

Following these principles creates a digital space that values both innovation and rights.

What Individuals Can Do

Even without sweeping policy changes, users can take steps to protect their own data in the age of AI.

Practical Tips:

- Review app permissions and limit access to data where possible

- Use privacy-focused tools like encrypted messaging and VPNs

- Opt out of data sharing and personalised ads when available

- Read privacy policies (or summaries) to understand your rights

- Support organisations advocating for digital rights and regulation

Raising awareness and pressing companies for better standards can drive real change.

The Road Ahead: Designing AI for Privacy

In the future, developers, policymakers, and civil society need to work together. They should create AI technology that benefits everyone while respecting individual rights.

Promising innovations:

- Federated learning: Training AI models on local data and sharing only model updates, rather than moving raw data to a central server, which can improve privacy and security

- Explainable AI (XAI): Making algorithmic decisions more transparent and accountable

- Differential privacy: Adding calibrated statistical “noise” to data or query results, which helps keep individual identities safe while preserving useful aggregate insights
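
To make the differential privacy idea more concrete, here is a minimal Python sketch of one common approach, the Laplace mechanism applied to a counting query. The function names and the `epsilon` privacy parameter are assumptions introduced for this example, and real deployments typically rely on audited libraries rather than hand-written noise generation.

```python
import random


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Draw one sample from a Laplace distribution centred on zero.

    The difference of two independent exponential samples with mean
    `scale` follows a Laplace(0, scale) distribution.
    """
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def private_count(values, predicate, epsilon: float, rng=None) -> float:
    """Return a noisy count of the items in `values` that match `predicate`.

    Adding or removing one person changes a count by at most 1, so the
    query's sensitivity is 1 and the noise scale is 1 / epsilon.
    A smaller epsilon means more noise and stronger privacy.
    """
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    rng = rng or random.Random()
    true_count = sum(1 for value in values if predicate(value))
    return true_count + laplace_noise(1.0 / epsilon, rng)


if __name__ == "__main__":
    ages = [23, 35, 41, 29, 52, 67, 38]  # made-up data for illustration
    noisy = private_count(ages, lambda age: age >= 40, epsilon=0.5)
    print(f"Noisy count of people aged 40 or over: {noisy:.2f}")
```

Each individual answer is deliberately imprecise, but averaged over many queries or large populations the noise largely cancels out, which is how the technique preserves overall insights while masking any one person's contribution.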

These approaches, along with solid policy, can create a future where AI and privacy work well together.

Protecting Privacy in an Intelligent World

The rise of AI brings incredible promise, but also significant responsibility. As systems grow smarter and more embedded in our lives, protecting personal data has to come first.

AI privacy, data ethics, and digital security are more than technical issues. They are about human values like dignity, autonomy, and trust.

In the coming years, we must make sure AI grows without hurting privacy. We can do this by focusing on smart design, clear policies, and giving users more control.

Act now: Check your privacy settings, ask how your data is used, and back stronger regulations. In the age of smart machines, it’s crucial to protect what makes us human.